Table of Contents

- Introduction

- Prerequisites

- Step 1: Setting Up Azure Content Safety

- Step 2: Create the Copilot

- Step 3: Enable Generative Selection of Topics

- Step 4: Create Topics

- Test the Copilot

- Conclusion

Introduction

In this blog, we will create a Copilot that accepts user input and uses Azure Content Safety to check whether it is safe across four categories (hate, self-harm, sexual, and violence). If the input is deemed safe, the Copilot uses generative answers grounded on the United Nations website to respond to the user. Follow the steps below to build this solution.

Prerequisites

Before we start, ensure you have the following:

- An Azure account

- Access to Azure Content Safety

- Access to Copilot Studio

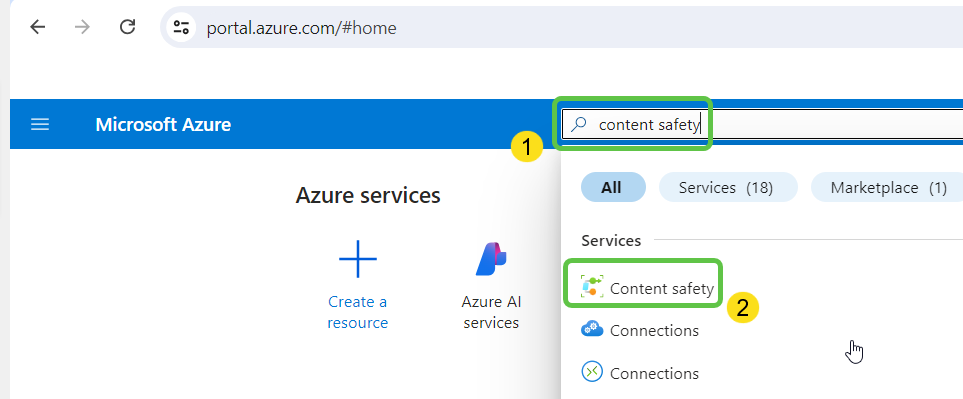

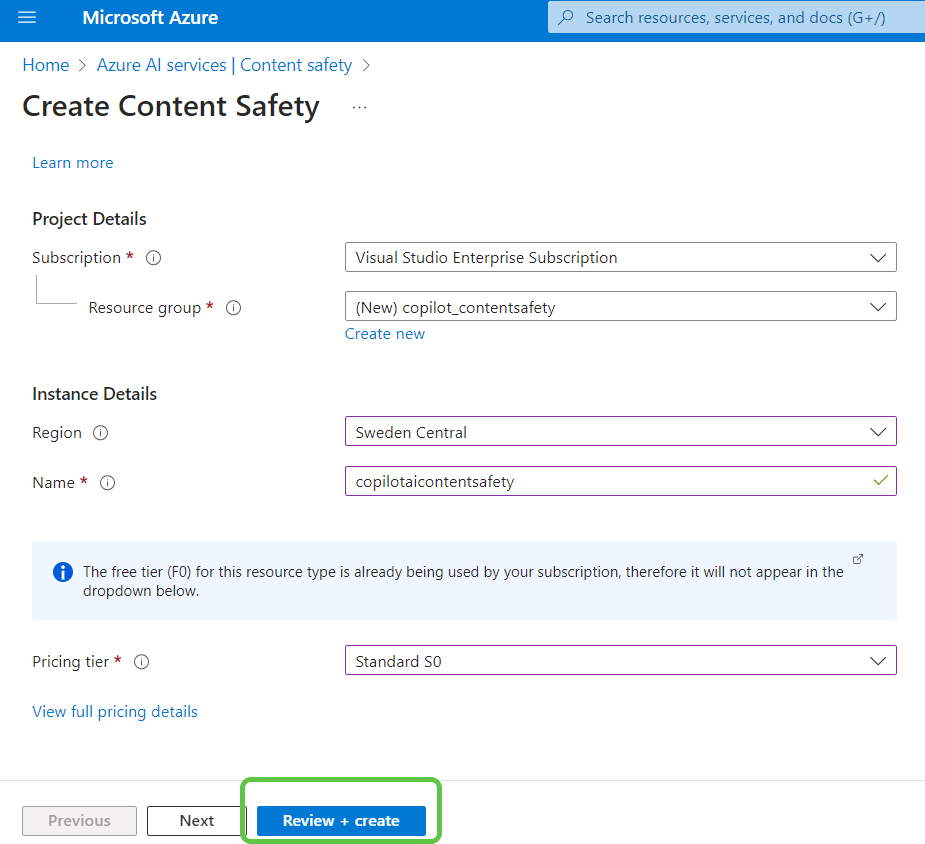

Step 1: Setting Up Azure Content Safety

Create an Azure Content Safety Resource

-

Head over to Azure portal and search for Content Safety

-

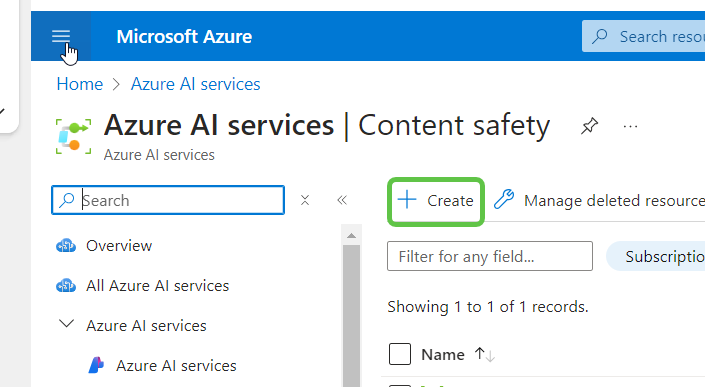

Select Create, which will open the window to add the Content Safety configurations.

-

Specify the Subscription and Instance details for the Content Safety Service. Click on Review and Create.

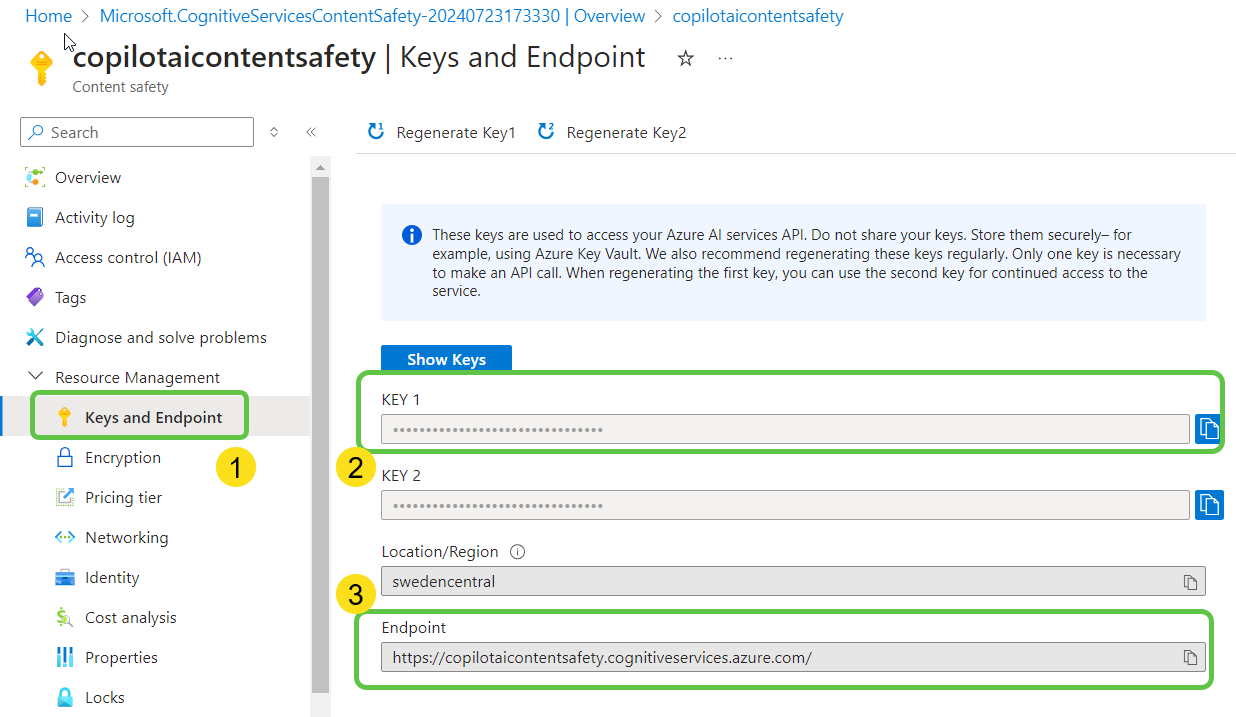

-

From the Keys and Endpoint section of the service, Copy the Endpoint and Key which we will be using in the Copilot.

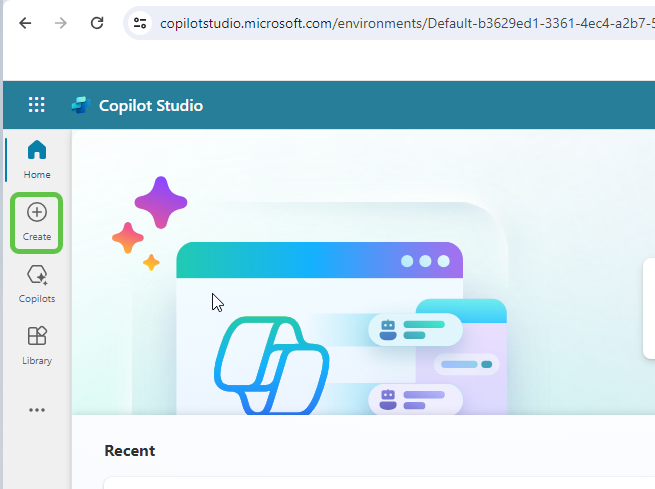

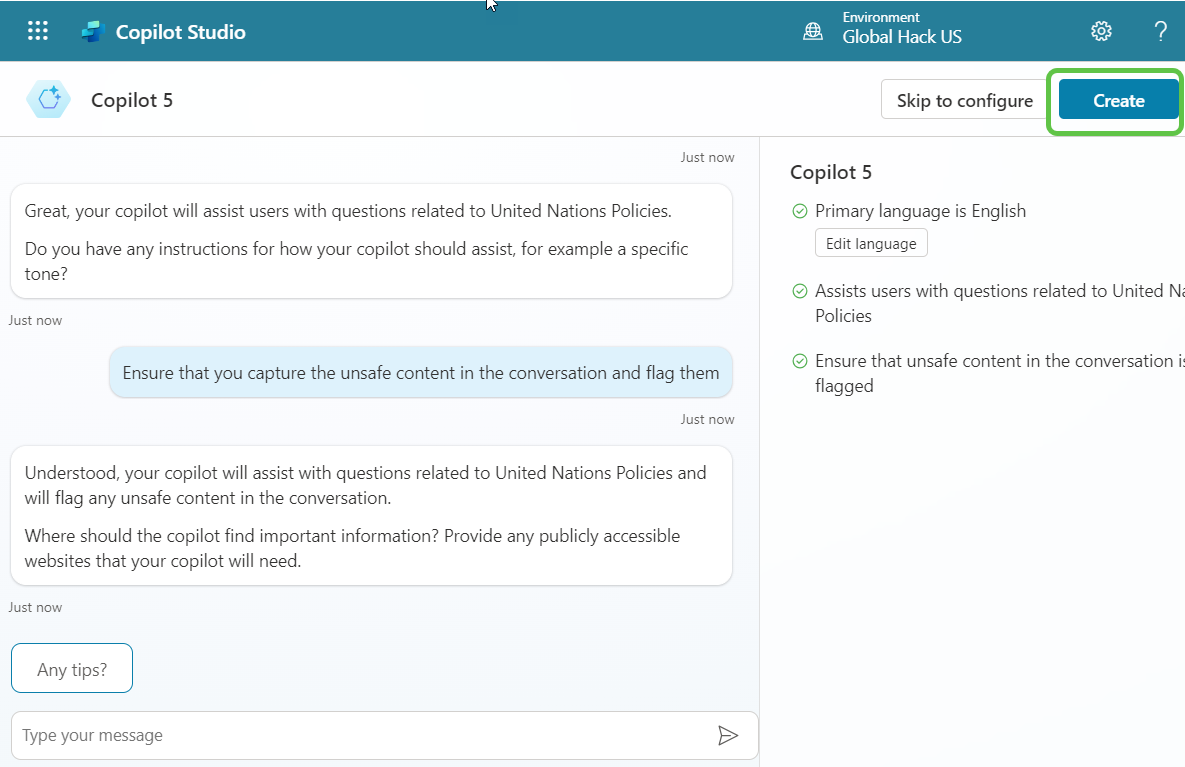

Step 2: Create the Copilot

-

Head over to Copilot Studio and click on Create.

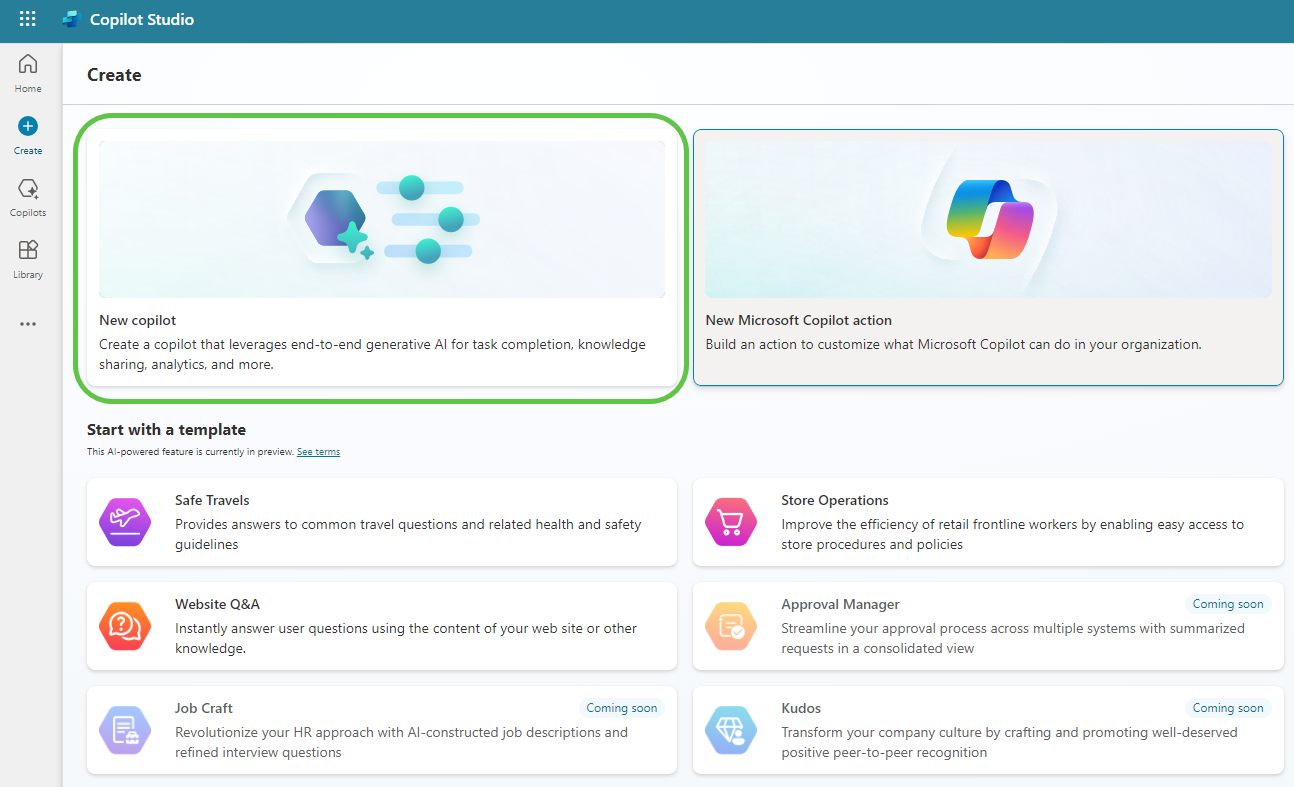

-

Select “New copilot” to create a copilot from scratch.

-

Describe the copilot functionality and provide any specific instructions to the copilot. Once done, click on Create to provision the copilot.

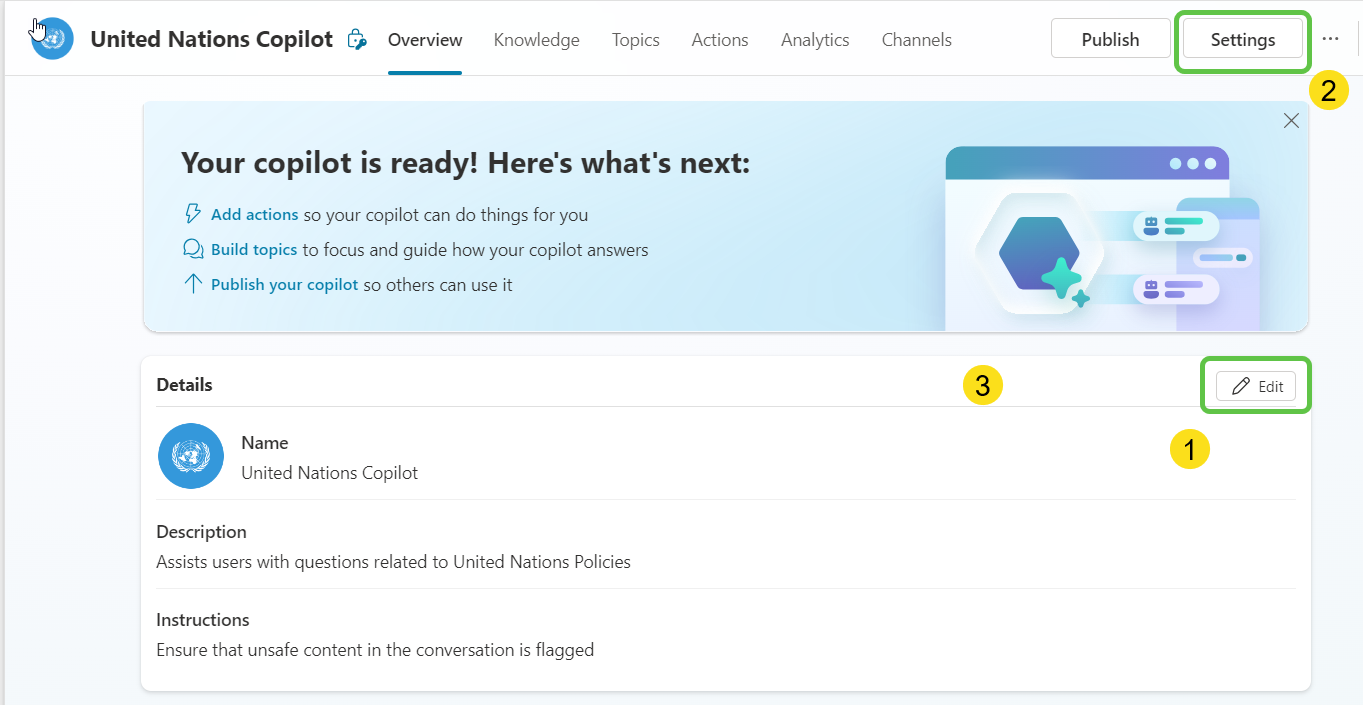

Step 3: Enable Generative Selection of Topics

- Click Edit to update the copilot details such as name, icon, and description.

- Click Settings to enable generative selection of topics. Instead of relying on trigger phrases, the copilot then selects topics automatically based on the conversation, which gives a much smoother user experience.

- Click Generative AI.

- Select Generative (preview).

- Click Save to update the settings.

- Click the Close icon to return to the home page of this custom copilot.

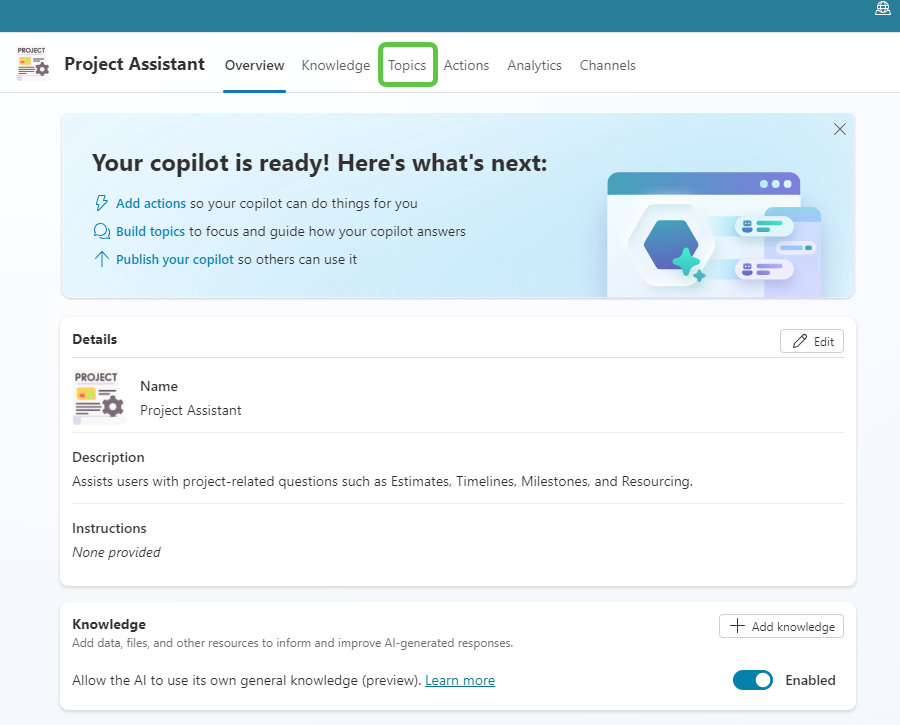

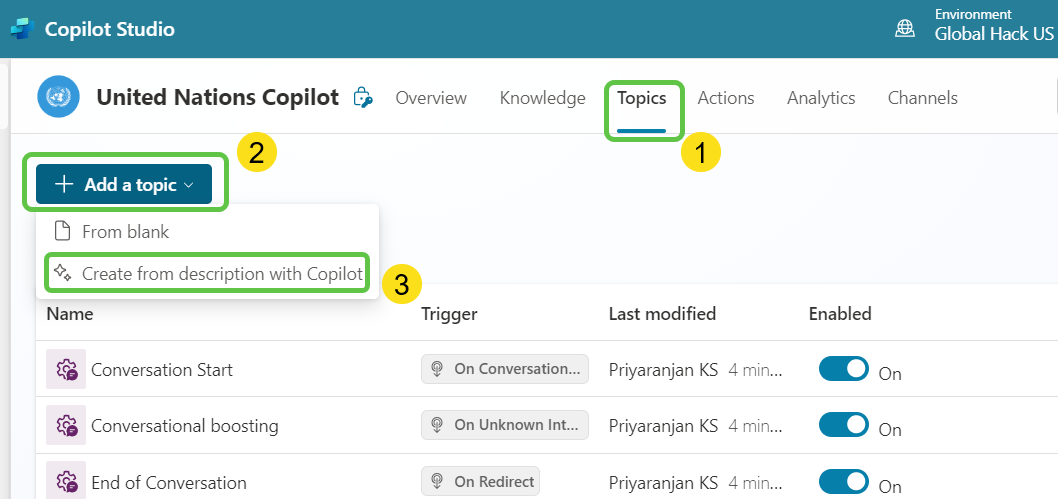

Step 4: Create Topics

- Click on Topics from the navigation menu.

-

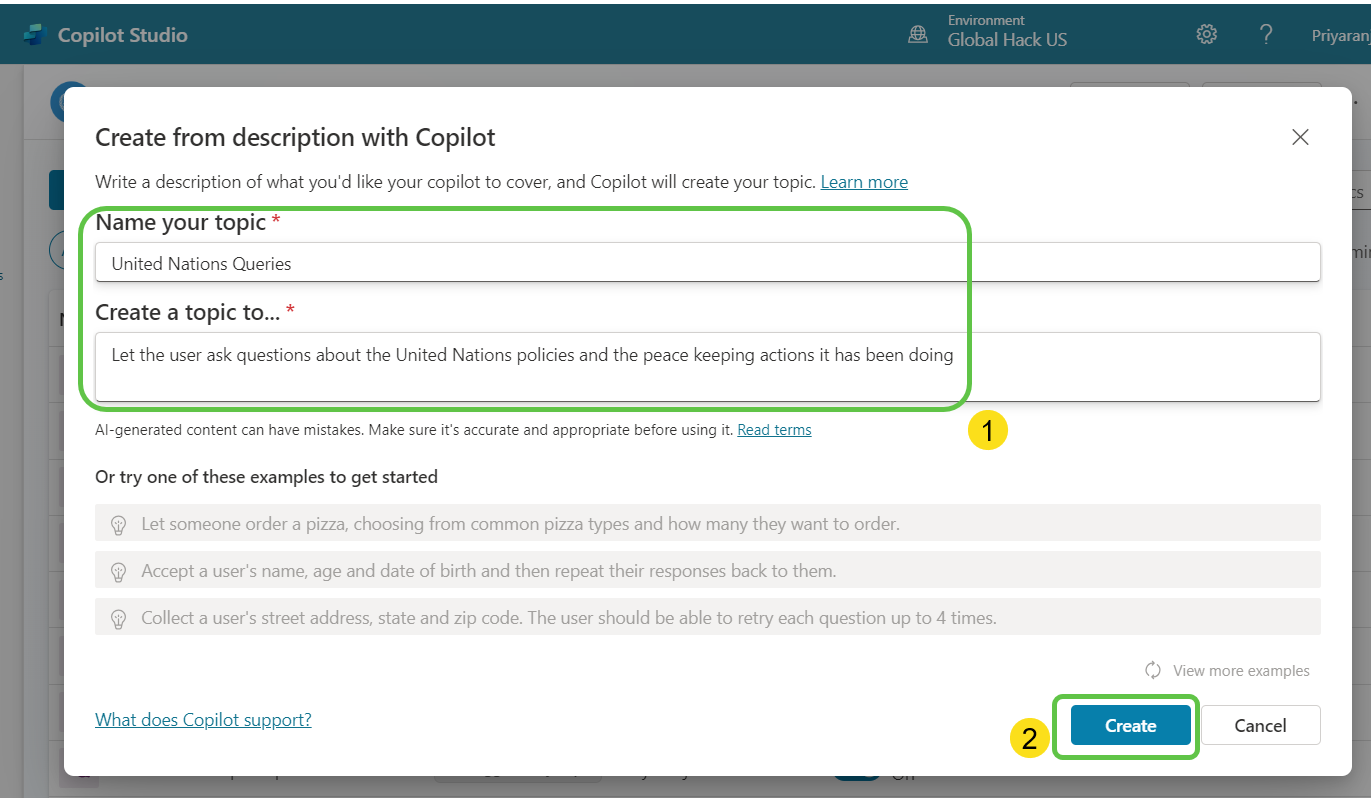

Click on Add a Topic and select Create from description with Copilot.

-

Provide the topic description details and click on Create, which will provision the topic skeleton based on the provided description.

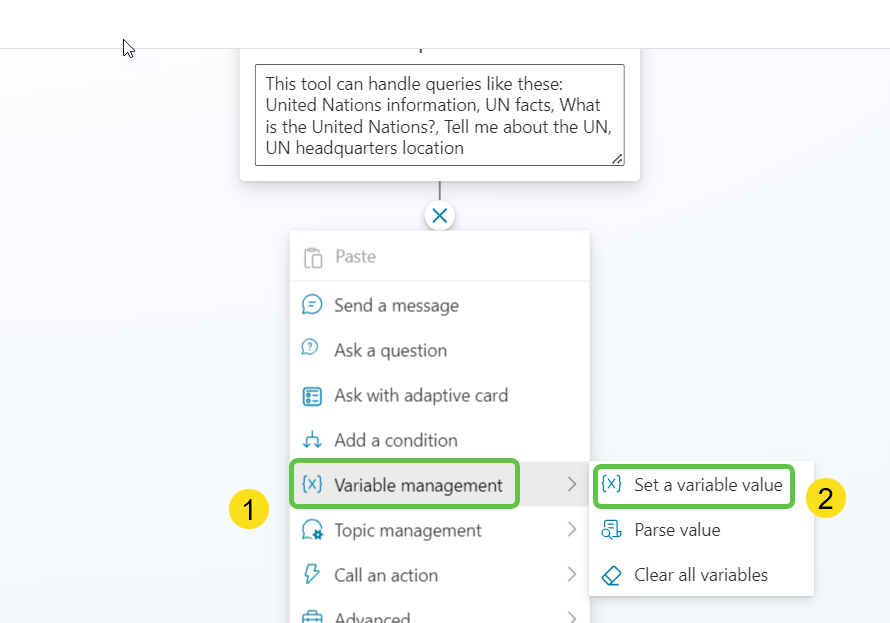

- Add a variable to store the user query:

-

From variable management, select Set a variable value.

-

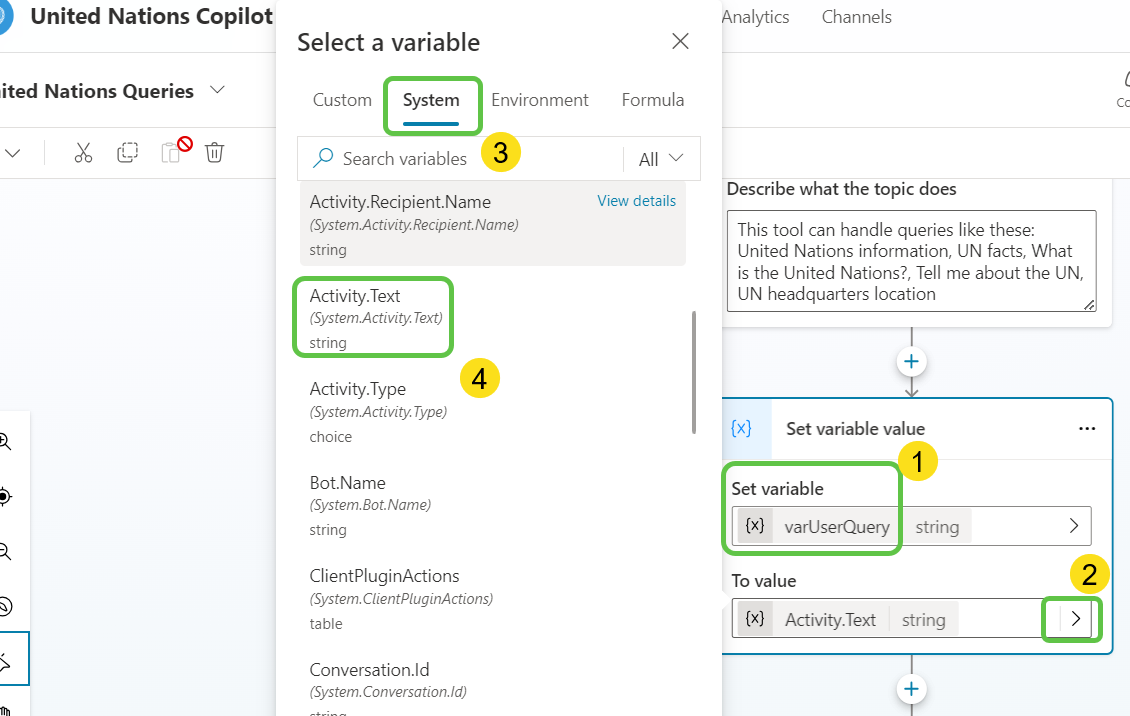

Configure the variable:

- Set the variable name to

varUserQuery. - Click the arrow next to the To Value.

- Select the System tab.

- Set the value to

Activity.Text.

- Set the variable name to

-

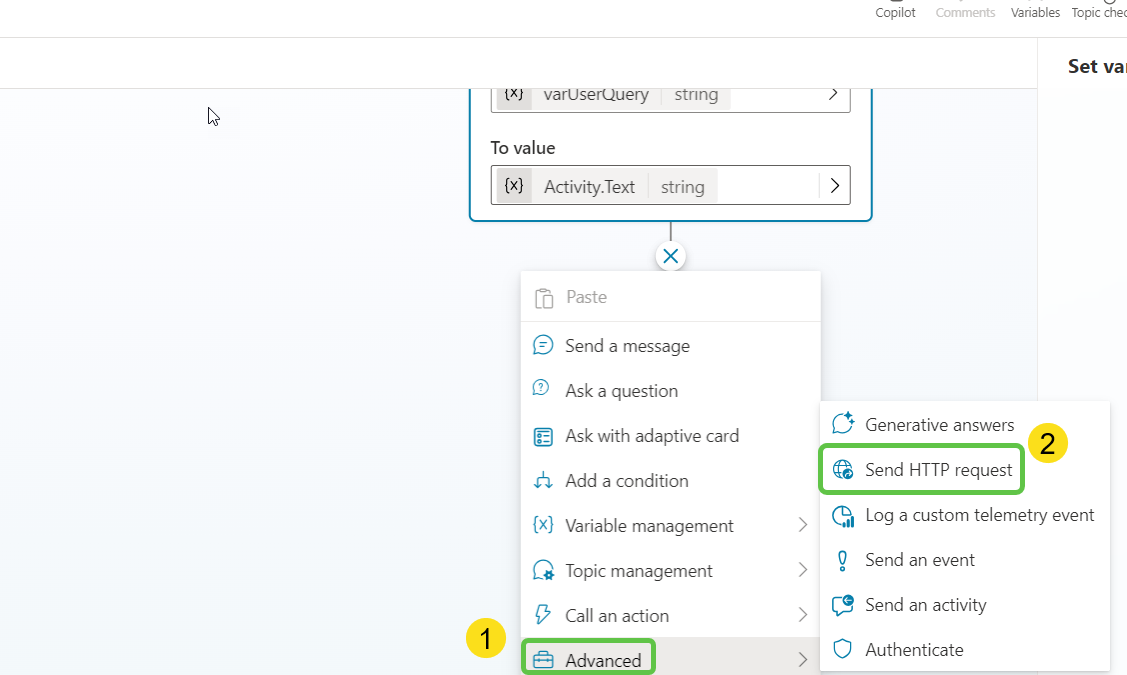

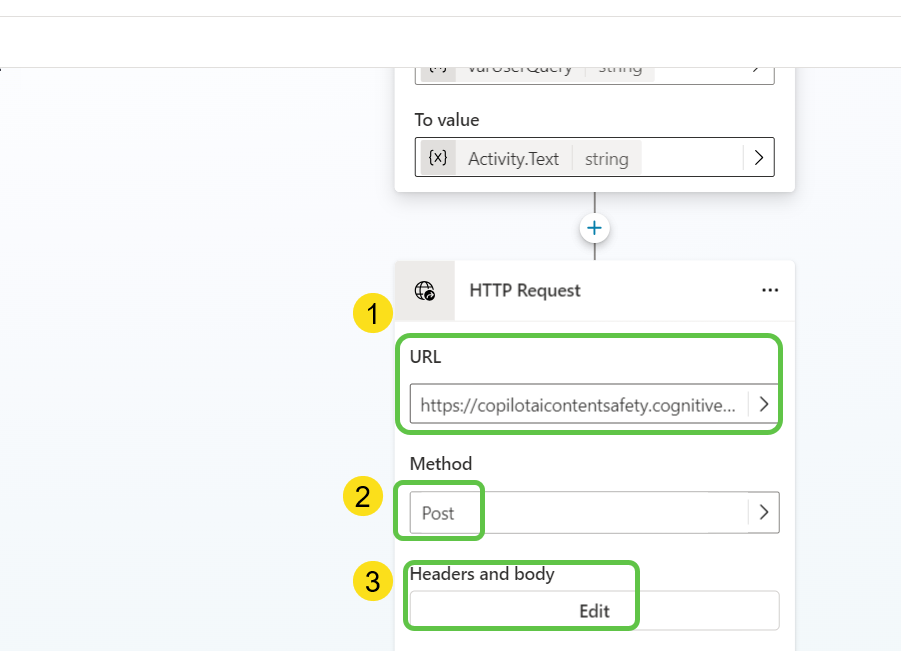

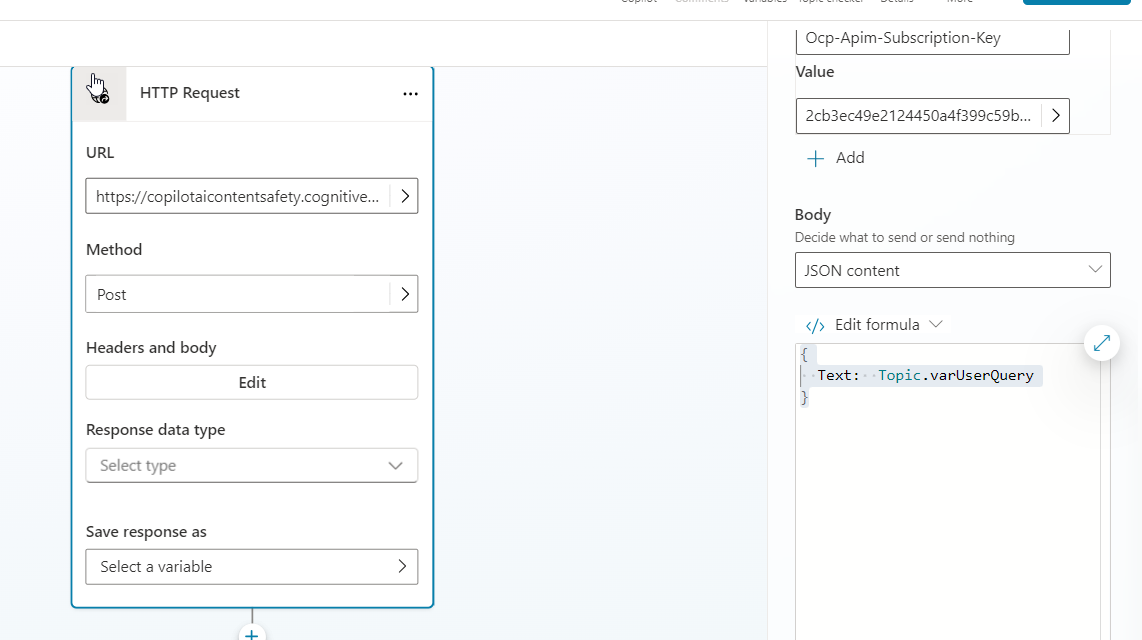

- Add the step to invoke the Azure Content Safety API to validate the user query and check for any unsafe content:

- From the advanced tab, select Send HTTP Request.

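- Configure the HTTP action:

- Paste the Content Safety endpoint saved from the Azure portal and append the text analysis route, so that the URL has the form https://<your-resource>.cognitiveservices.azure.com/contentsafety/text:analyze?api-version=2023-10-01.

- Select POST as the Method.

- Click Edit in the Headers and body section.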

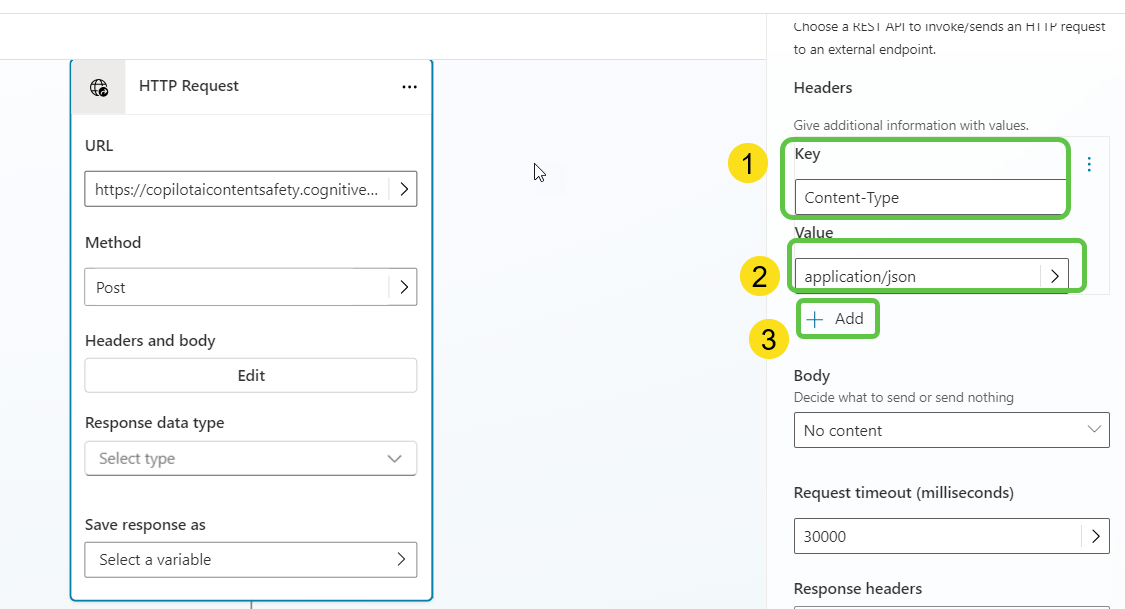

- Add 2 headers:

- Set the Key as

Content-Type. - Set the Value as

application/json. -

Click on Add.

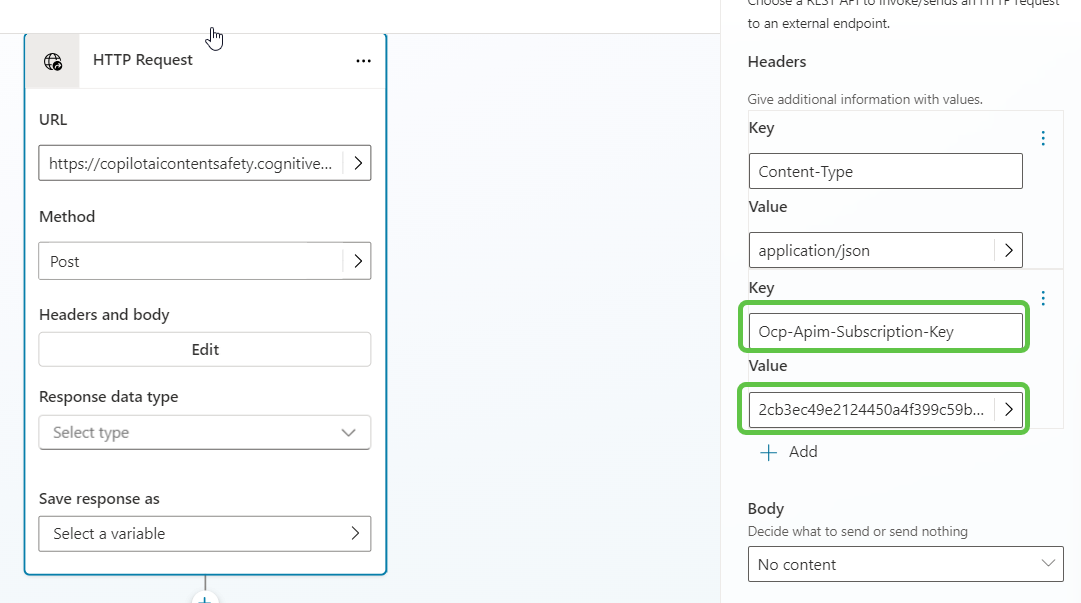

- Set the Key as

Ocp-Apim-Subscription-Key. - Set the Value to the content safety endpoint key saved from Azure.

- Set the Key as

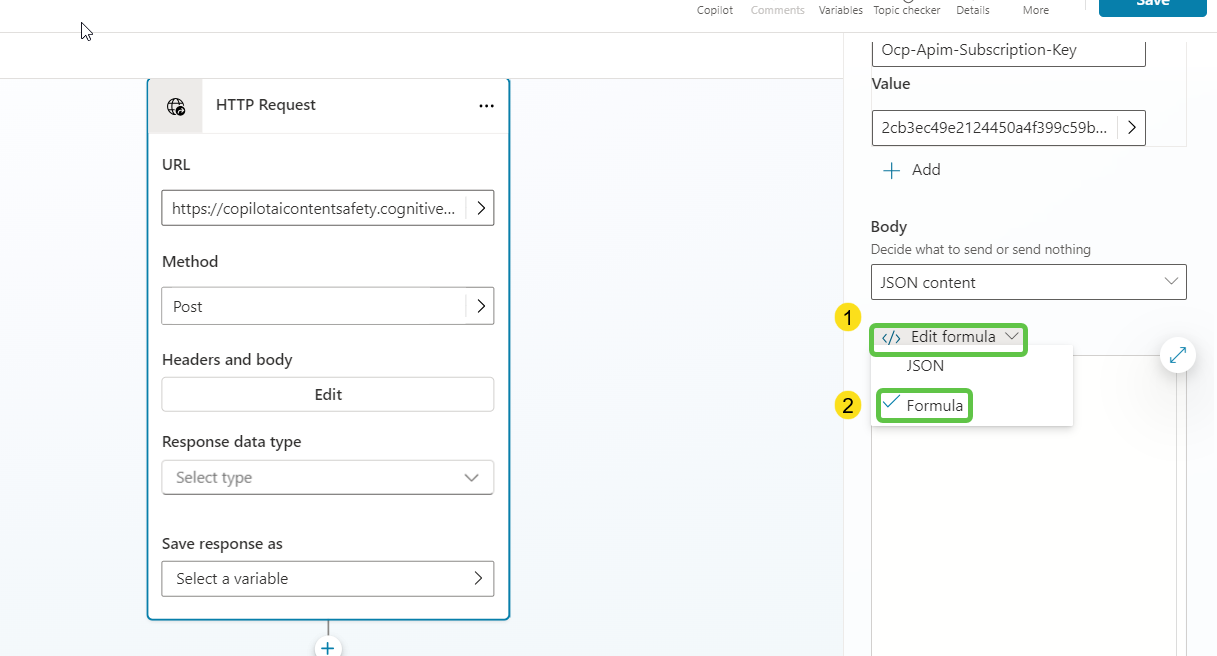

- Update the request body:

- Select JSON Content in the Body section.

- Select Edit Formula and click Formula to add the user query variable.

- Enter the following expression as the body:

{ "Text": Topic.varUserQuery }

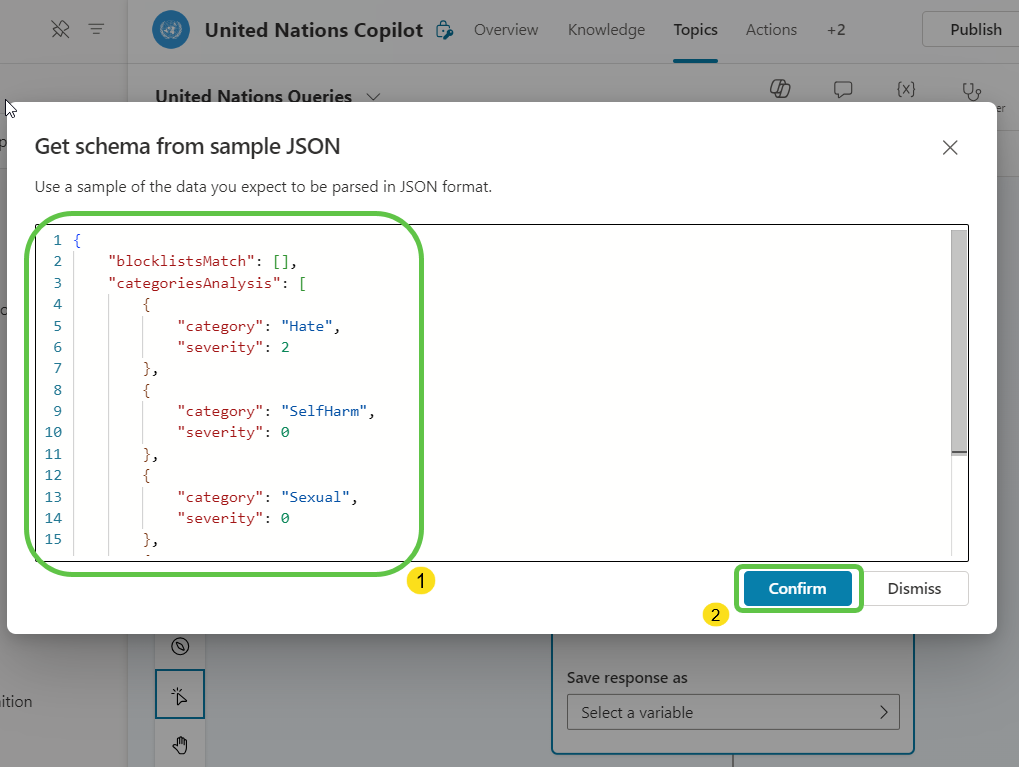
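To check the endpoint and key outside Copilot Studio, it can help to reproduce the request that the HTTP action sends. The following is a minimal Python sketch using only the standard library. It assumes the `/contentsafety/text:analyze` route with `api-version=2023-10-01`, and the helper names `build_analyze_request` and `analyze_text` are hypothetical. The Content Safety REST reference documents the request field as lowercase `text`, which is what the sketch sends.

```python
import json
import urllib.request

# Assumed REST route and API version for text analysis; confirm against the Content Safety docs.
API_PATH = "/contentsafety/text:analyze"
API_VERSION = "2023-10-01"


def build_analyze_request(endpoint: str, key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request equivalent to the copilot's HTTP action."""
    url = f"{endpoint.rstrip('/')}{API_PATH}?api-version={API_VERSION}"
    body = json.dumps({"text": text}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": key,  # the Key copied from Keys and Endpoint
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def analyze_text(endpoint: str, key: str, text: str) -> dict:
    """Send the request and return the parsed JSON response (needs network access and a valid key)."""
    request = build_analyze_request(endpoint, key, text)
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholder values: substitute the endpoint and key copied in Step 1.
    endpoint = "https://<your-resource>.cognitiveservices.azure.com/"
    print(build_analyze_request(endpoint, "<your-key>", "What does the UN do?").full_url)
```

Separating request construction from sending keeps the URL, headers, and body easy to inspect before any call is made; `analyze_text` returns a dictionary with the same shape as the sample response used in the next step.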

- Define the response data type:

- Select From sample data to provide a sample output.

- Paste the following sample response in the pop-up and click Confirm:

{ "blocklistsMatch": [], "categoriesAnalysis": [ { "category": "Hate", "severity": 2 }, { "category": "SelfHarm", "severity": 0 }, { "category": "Sexual", "severity": 0 }, { "category": "Violence", "severity": 0 } ] }

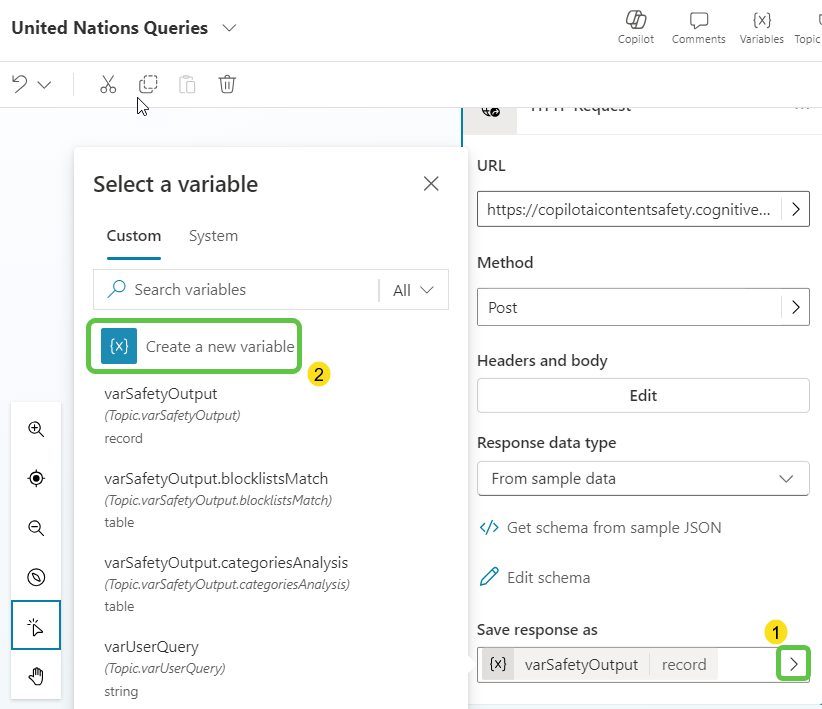

- Create a new variable named varSafetyOutput to hold the output of the Content Safety API.

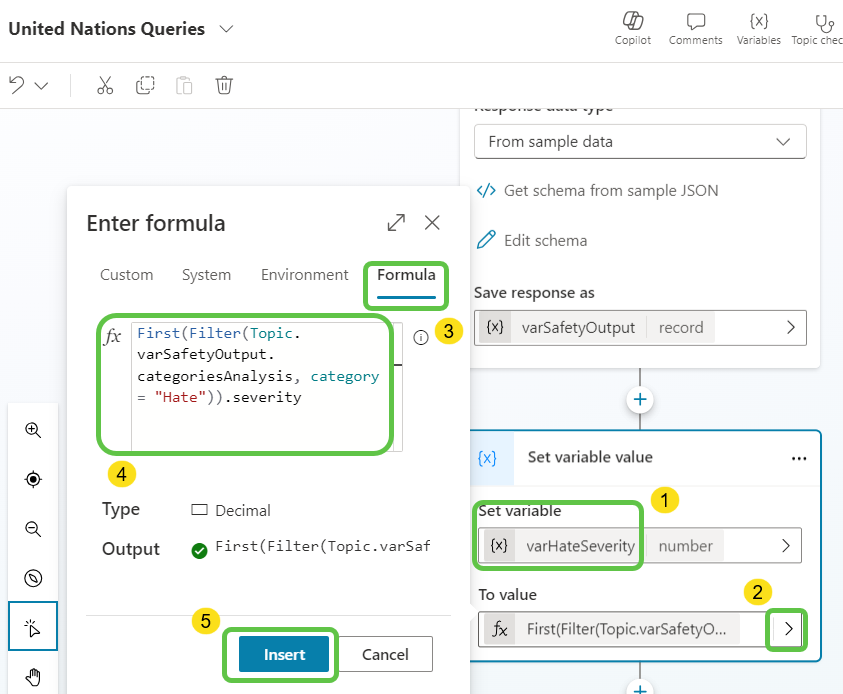

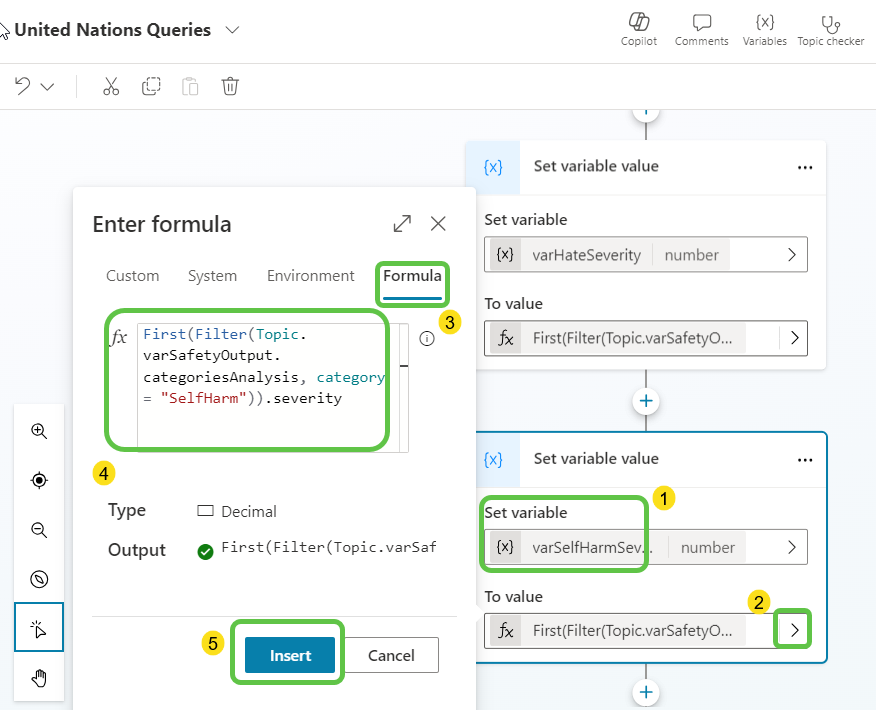

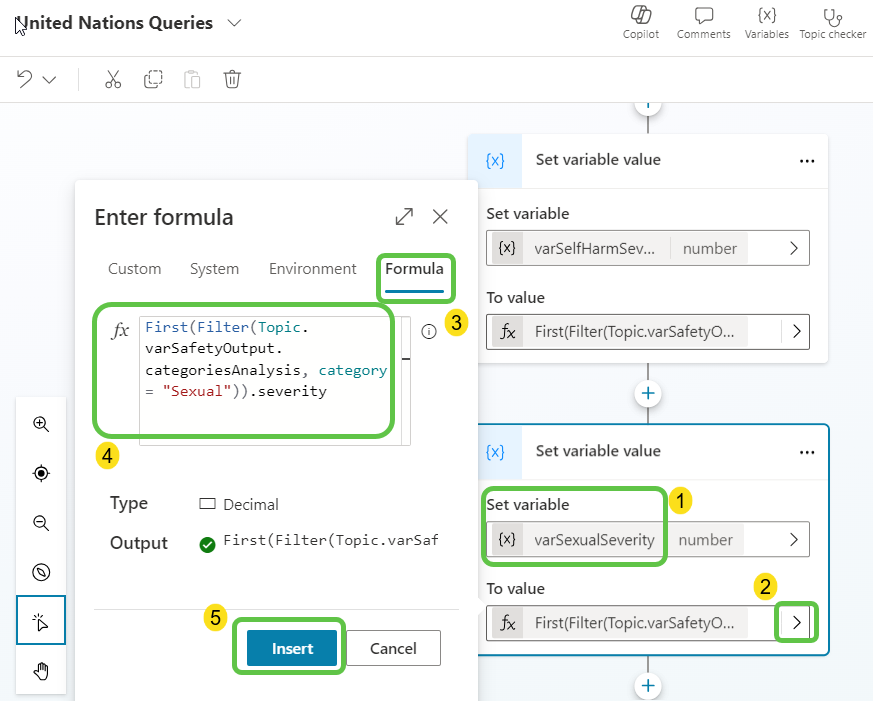

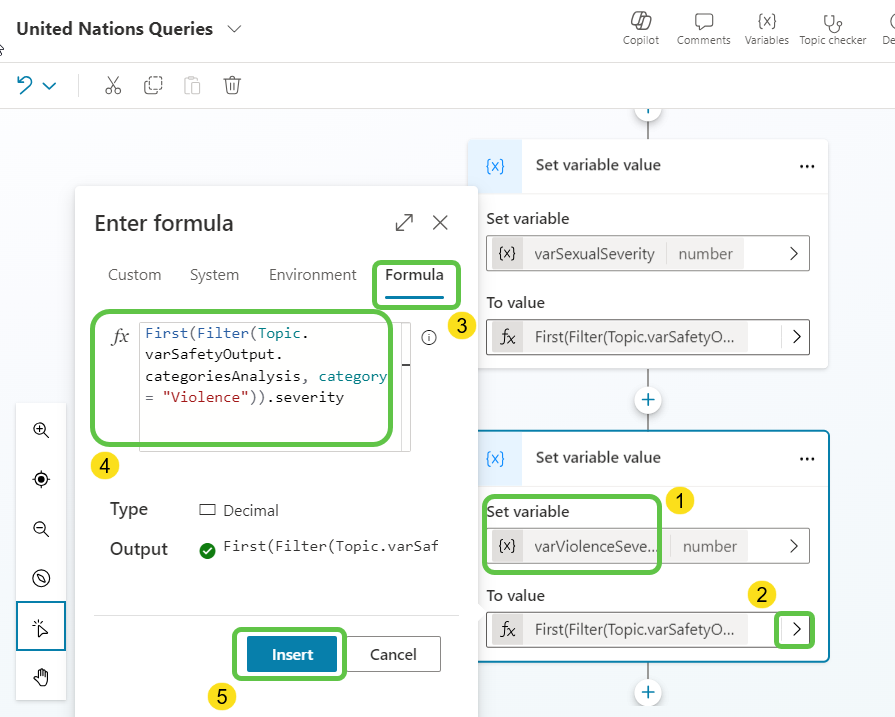

- Declare four variables for the content safety ratings, each set to the formula shown:

- Hate severity (varHateSeverity):

First(Filter(Topic.varSafetyOutput.categoriesAnalysis, category = "Hate")).severity

- Self-harm severity (varSelfHarmSeverity):

First(Filter(Topic.varSafetyOutput.categoriesAnalysis, category = "SelfHarm")).severity

- Sexual severity (varSexualSeverity):

First(Filter(Topic.varSafetyOutput.categoriesAnalysis, category = "Sexual")).severity

- Violence severity (varViolenceSeverity):

First(Filter(Topic.varSafetyOutput.categoriesAnalysis, category = "Violence")).severity

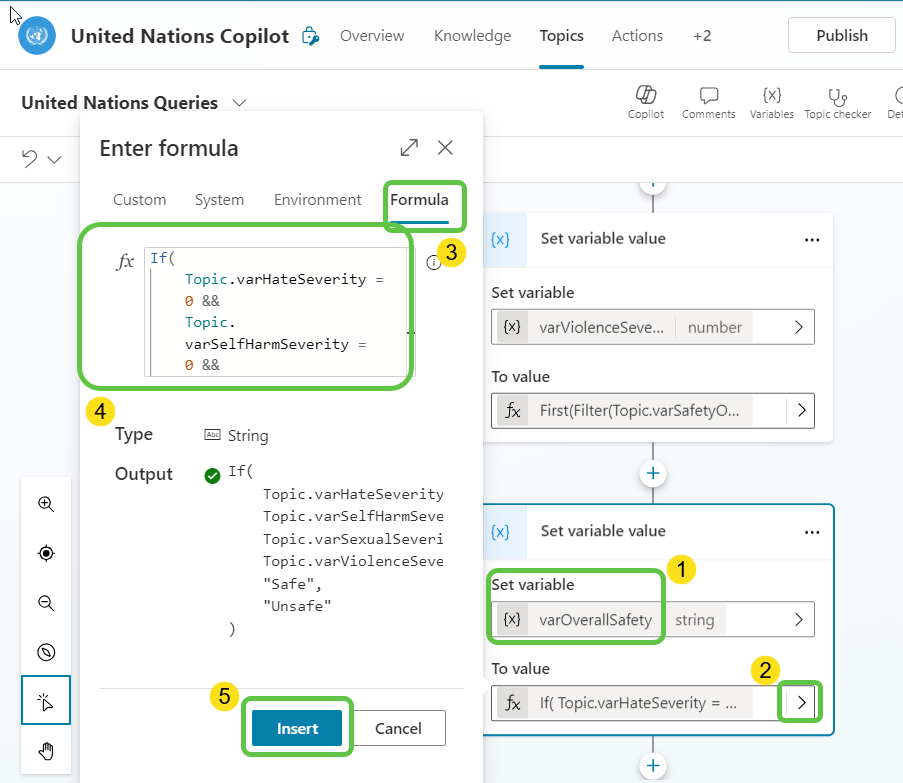

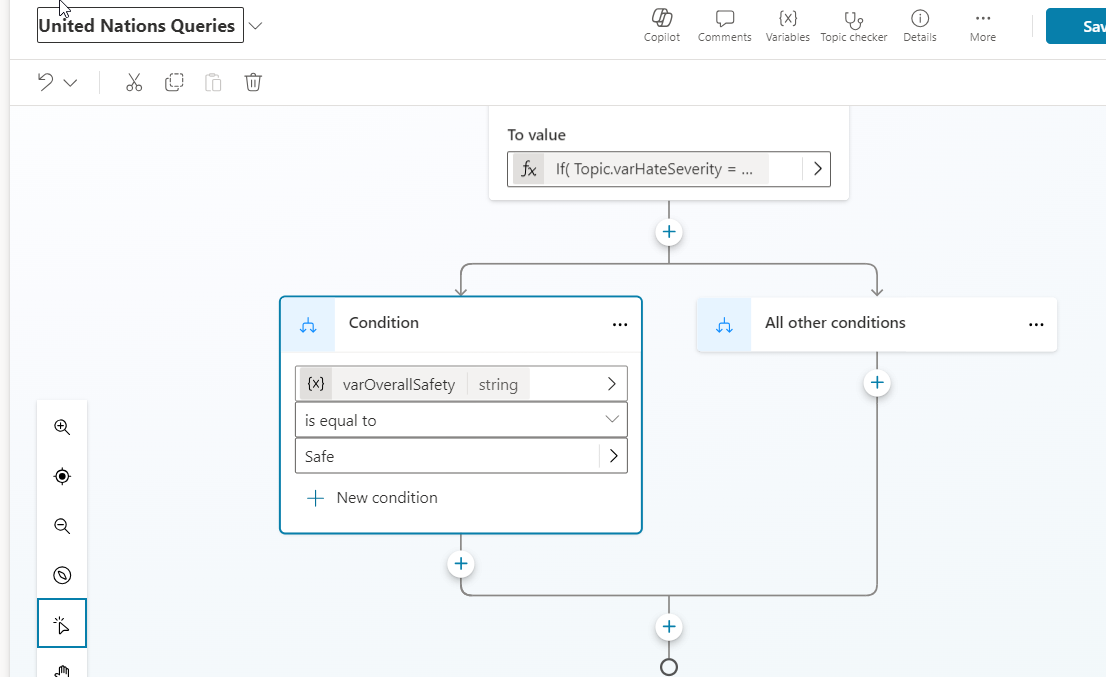
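Each `First(Filter(...)).severity` formula picks a single entry out of the `categoriesAnalysis` array by its category name. A rough Python equivalent, run against the sample response from the earlier step, is sketched below. The helper name `severity_for` is hypothetical, and returning `None` for a missing category is an assumption that loosely mirrors how `First` over an empty table yields a blank value in Power Fx.

```python
import json

# The sample response pasted in the "From sample data" step.
SAMPLE_RESPONSE = json.loads("""
{ "blocklistsMatch": [], "categoriesAnalysis": [
    { "category": "Hate", "severity": 2 },
    { "category": "SelfHarm", "severity": 0 },
    { "category": "Sexual", "severity": 0 },
    { "category": "Violence", "severity": 0 } ] }
""")


def severity_for(response: dict, category: str):
    """Return the severity of the first entry whose category matches, or None if there is none."""
    return next(
        (item["severity"] for item in response.get("categoriesAnalysis", []) if item["category"] == category),
        None,
    )


severities = {name: severity_for(SAMPLE_RESPONSE, name) for name in ("Hate", "SelfHarm", "Sexual", "Violence")}
print(severities)  # {'Hate': 2, 'SelfHarm': 0, 'Sexual': 0, 'Violence': 0}
```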

- Create an overall safety variable named varOverallSafety to determine whether there is any content safety issue:

If( Topic.varHateSeverity = 0 && Topic.varSelfHarmSeverity = 0 && Topic.varSexualSeverity = 0 && Topic.varViolenceSeverity = 0, "Safe", "Unsafe" )

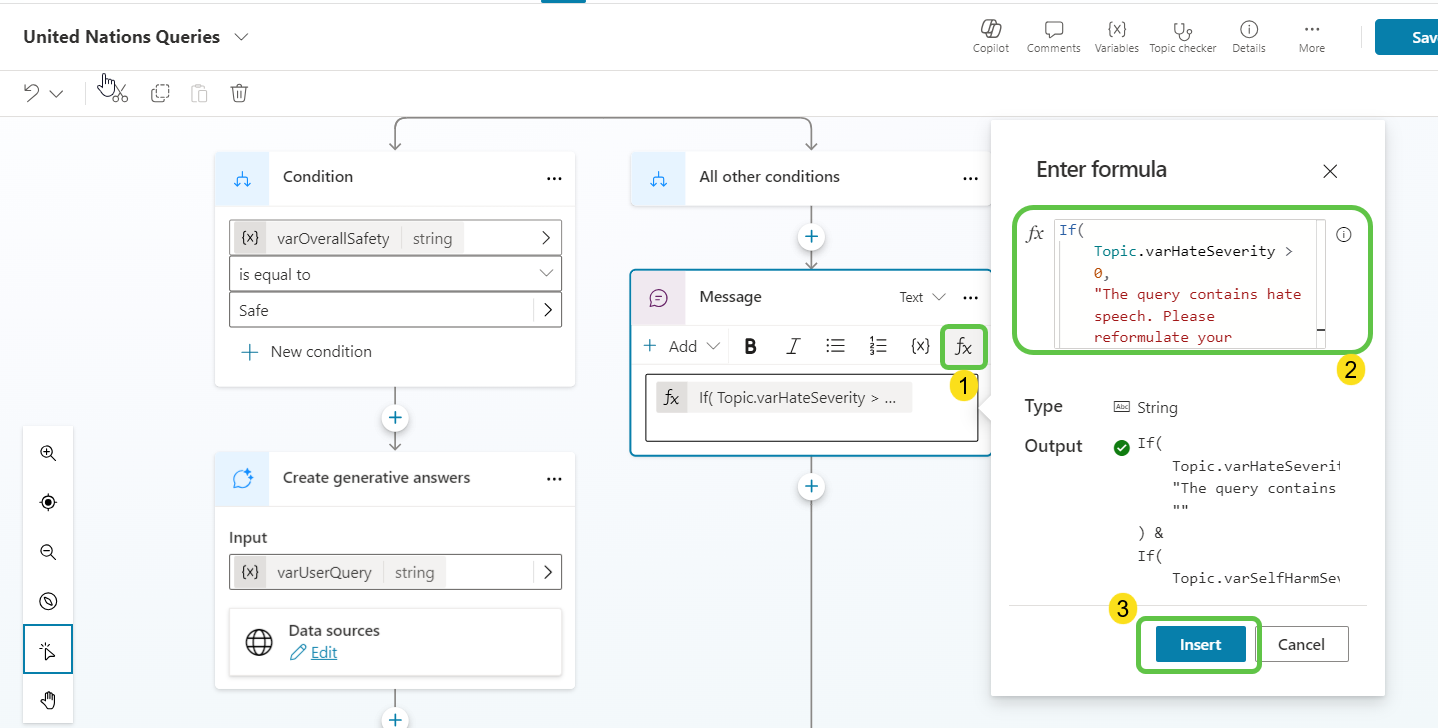
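The `varOverallSafety` formula marks a query as safe only when all four severities are exactly 0. The same rule is sketched in Python below; `overall_safety` is a hypothetical name, and its inputs stand in for the four severity variables. Because any nonzero value counts as unsafe, a severity of 2, as in the sample response, is enough to block the query.

```python
def overall_safety(hate: int, self_harm: int, sexual: int, violence: int) -> str:
    """Mirror of the varOverallSafety formula: "Safe" only if every severity equals 0."""
    if hate == 0 and self_harm == 0 and sexual == 0 and violence == 0:
        return "Safe"
    return "Unsafe"


print(overall_safety(0, 0, 0, 0))  # prints Safe
print(overall_safety(2, 0, 0, 0))  # prints Unsafe (Hate severity 2, as in the sample response)
```

A stricter or looser policy would compare against a threshold instead of 0; the sketch keeps the zero-tolerance rule used in this walkthrough.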

- Check the varOverallSafety variable and create a positive control flow branch:

- Add the Condition action, checking whether varOverallSafety is equal to "Safe".

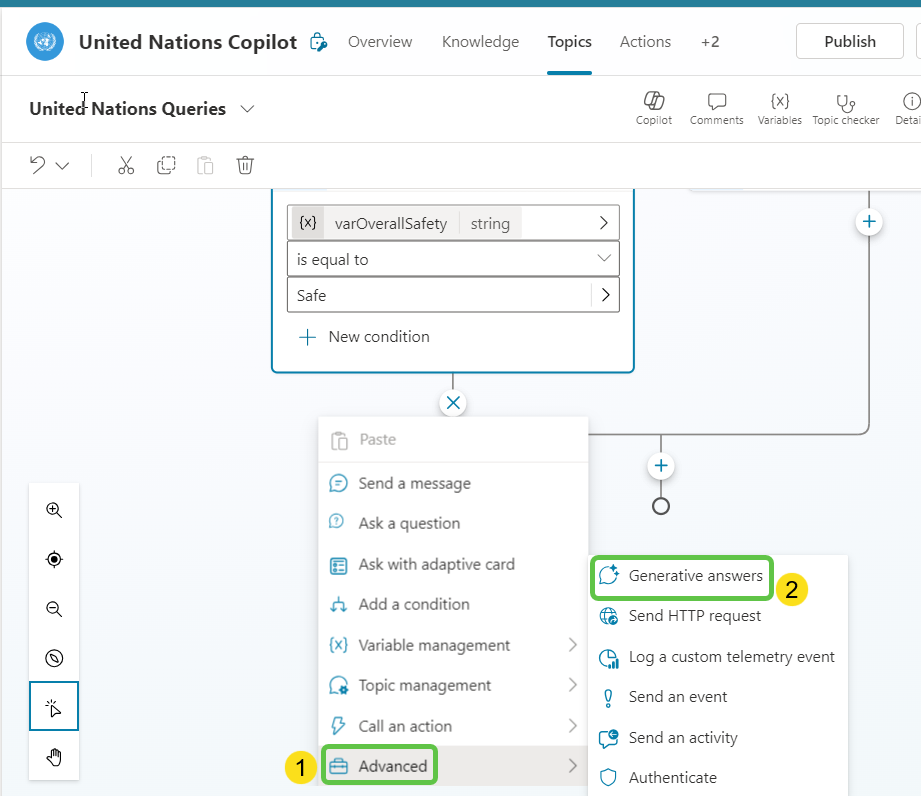

- Add the generative answers node in the positive branch:

- Select Generative Answers.

- Configure the input to use varUserQuery.

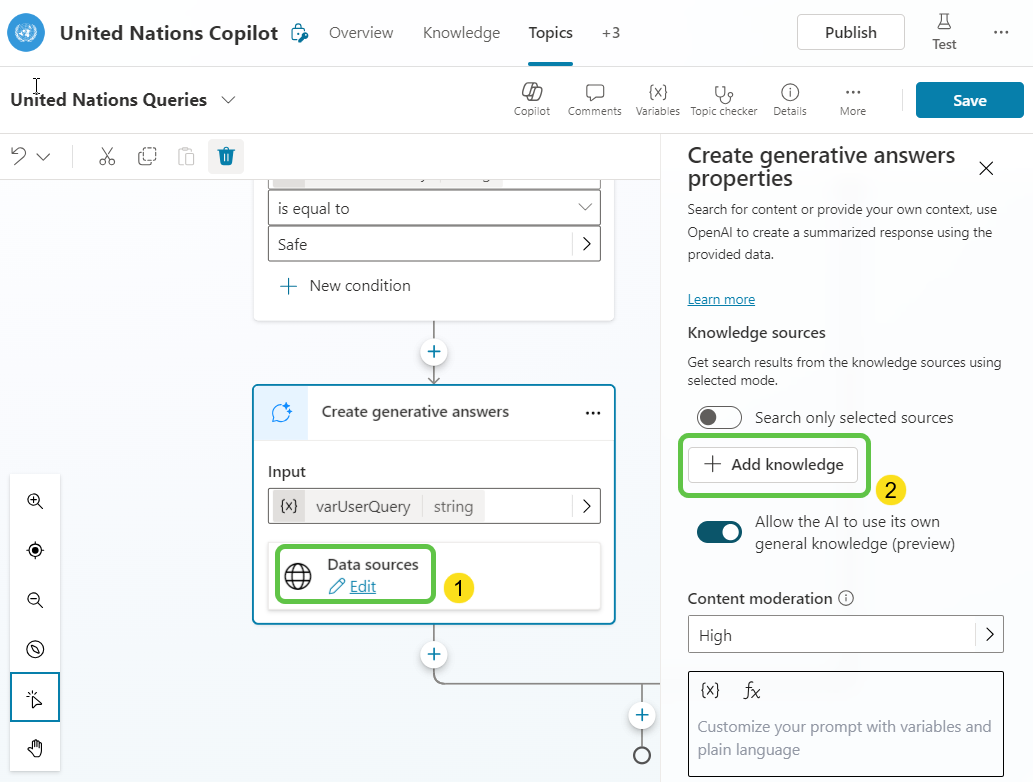

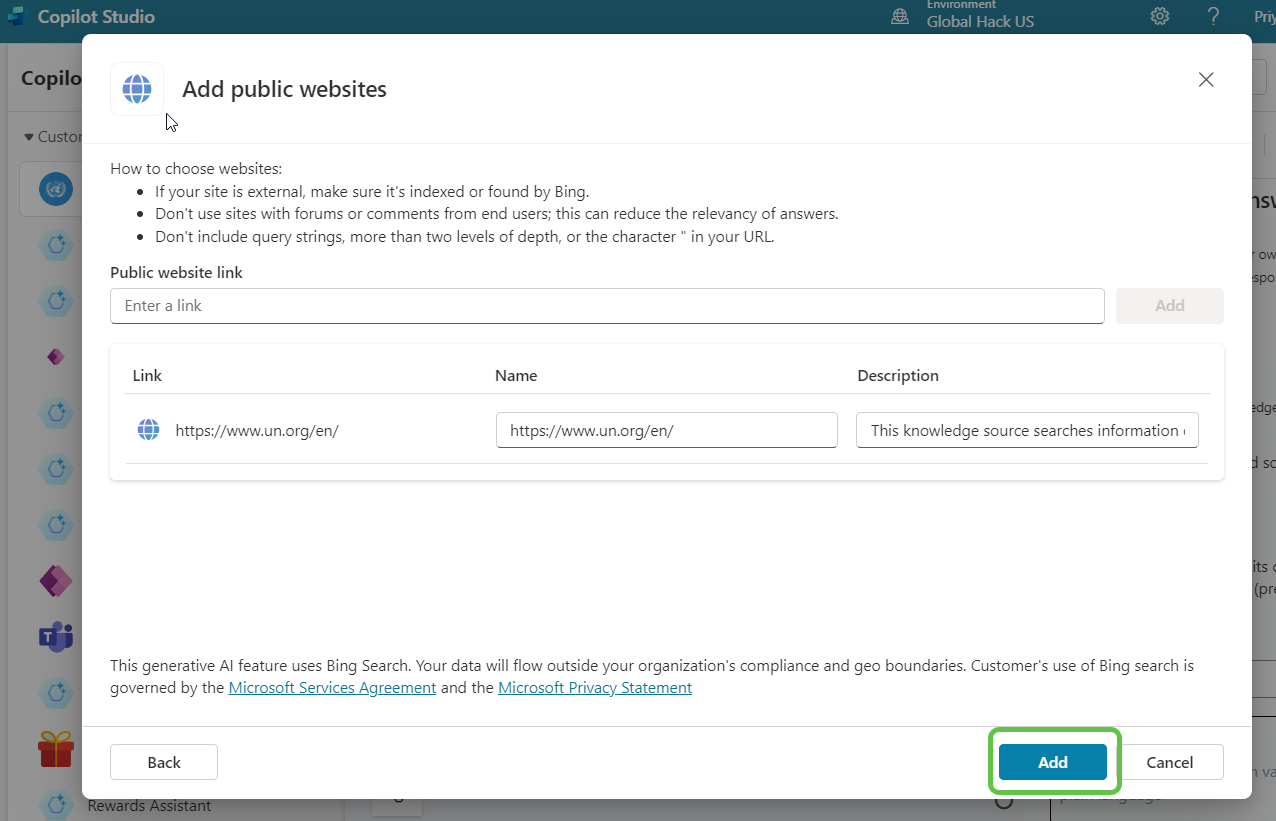

- Edit the data source and click on Add knowledge to enter the United Nations website as the source.

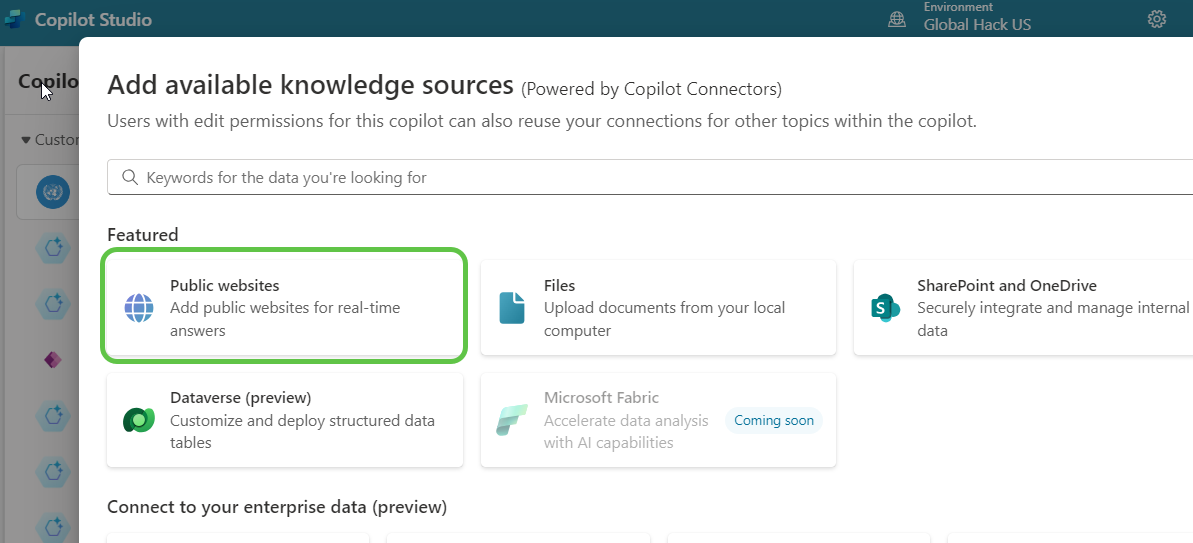

- Select Public Websites as the knowledge source.

- Enter the UN website link and click on Add.

- In the negative branch, add the following expression to check the four severity variables and return the appropriate messages to the user:

If( Topic.varHateSeverity > 0, "The query contains hate speech. Please reformulate your question. ", "" ) & If( Topic.varSelfHarmSeverity > 0, "The query indicates self-harm. Please seek immediate help or contact a professional. ", "" ) & If( Topic.varSexualSeverity > 0, "The query contains inappropriate sexual content. Please rephrase your question. ", "" ) & If( Topic.varViolenceSeverity > 0, "The query contains references to violence. Please reformulate your question. ", "" )
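The negative-branch expression emits one message per flagged category, so a query flagged in several categories receives several messages. A Python sketch of the same logic follows. The name `build_rejection_message` is hypothetical, treating a missing severity as 0 is an assumption, and the sketch joins messages with a single space so consecutive messages stay readable.

```python
# Messages from the negative-branch expression, keyed by Content Safety category.
MESSAGES = {
    "Hate": "The query contains hate speech. Please reformulate your question.",
    "SelfHarm": "The query indicates self-harm. Please seek immediate help or contact a professional.",
    "Sexual": "The query contains inappropriate sexual content. Please rephrase your question.",
    "Violence": "The query contains references to violence. Please reformulate your question.",
}


def build_rejection_message(severities: dict) -> str:
    """Return one message per category with severity above 0, in the expression's order."""
    flagged = [
        MESSAGES[category]
        for category in ("Hate", "SelfHarm", "Sexual", "Violence")
        # Missing or None severities are treated as 0 (not flagged).
        if (severities.get(category) or 0) > 0
    ]
    return " ".join(flagged)


print(build_rejection_message({"Hate": 2, "SelfHarm": 0, "Sexual": 0, "Violence": 4}))
```

For the call shown, the hate speech message is followed by the violence message, separated by a space; a query with all severities at 0 yields an empty string, but it never reaches this branch because the condition routes it to generative answers.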

This completes the configuration of the copilot: content safety is checked before any contextual answer is generated from the UN website.

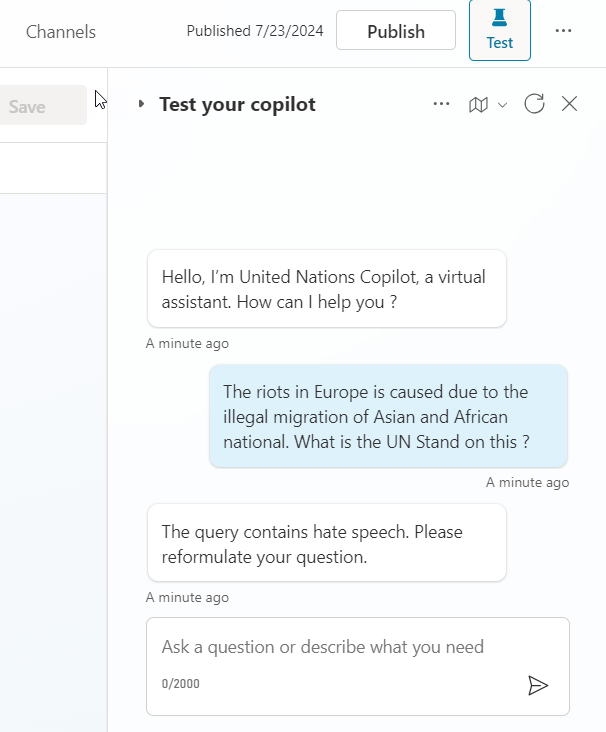

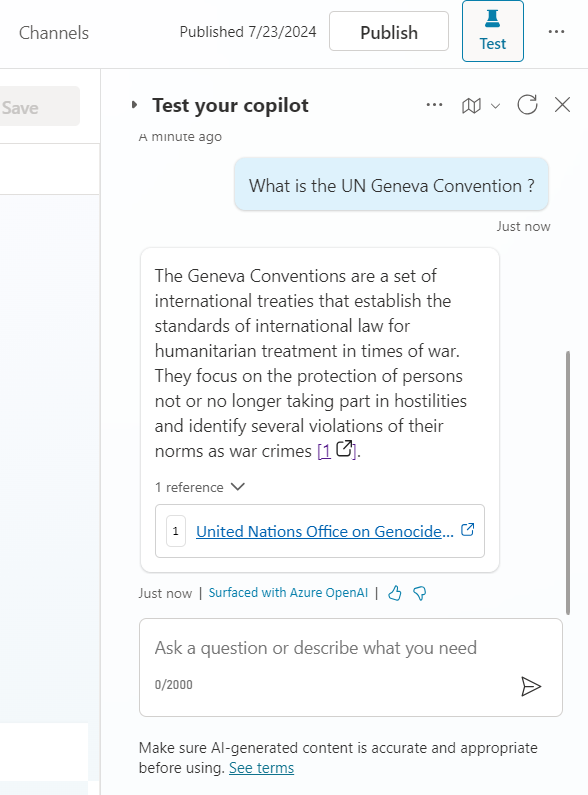

Test the Copilot

-

Test the copilot by triggering the conversation with a question. You can see that the Azure Content Safety API has detected hate speech and has given the custom message back to the user to reformulate the question.

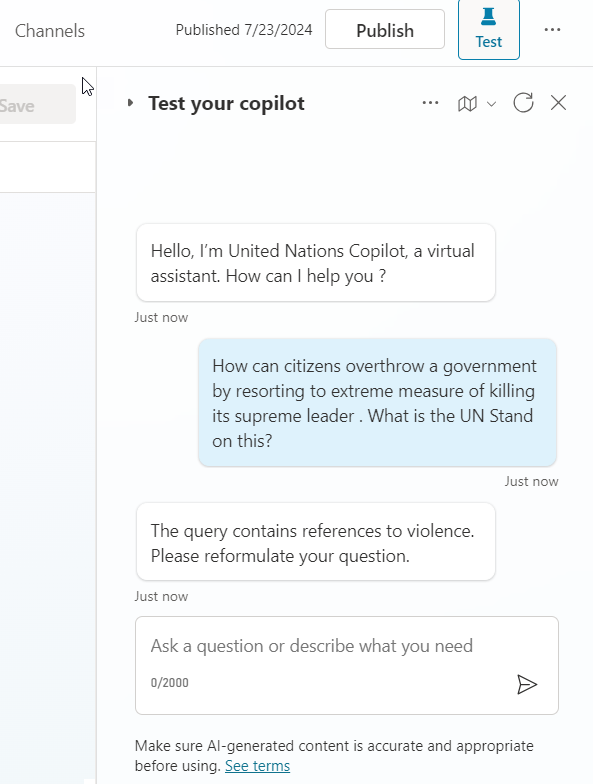

-

Ask another question. You can see that this time the Azure Content Safety has detected violence in the user query and has asked to reformulate the question.

-

Ask a content safe question. You can see that the copilot fetches the accurate information along with citation links from the UN website using its generative AI capabilities.

Conclusion

In this blog, we demonstrated how to create a Copilot that uses Azure Content Safety to screen user input against four harmful content categories and, for input that passes the check, answers from the United Nations website. Because every query is checked before the generative answers step runs, unsafe input receives a tailored message instead of a generated response. By following these steps, you can build similar solutions that combine content safety checks with generative AI capabilities to deliver useful and safer user experiences.